Edit 2022-04-20: I did not see it right away, but there was a significant spike in the logs due to this post hitting the front page of Hacker News! I’m glad more people are interested in this. See the discussion here

Some people expressed interest in my org-roam notes workflow. Setting this up has been an interesting project, teaching me about Emacs, org-mode and a little about web frontend. This post will describe my current workflow in as much detail as possible.

Hopefully, you’ll learn to create an automated build script to publish your notes on the web and convert your org-roam note network into a graph like the one below.

Note graph screenshot

The notes

For the past few years I have been trying to get into the habit of writing down new pieces of knowledge into notes. I am committed to this idea of keeping track of what I read and learn about. It can also be nice to come back to some of these notes to remind myself about a subject or make connections with something I learned.

Why org-roam?

When searching for the right way to organize and keep track of these notes, I ended up hearing about org-roam. I went through this great blog post by Jethro Kuan which details why he started org-roam.

The “roam” in org-roam refers to Roam Research, a standalone note-taking tool that people seem to praise. The tool is closed source for now and not cheap, making some people seek free alternatives. Org-roam seems like a great alternative, but not for everybody. Being an org-mode based tool makes it ideal for Emacs users, but may involve a steep learning curve for other users. If you’ve never heard about org-mode, you may want to read this introduction. The tool is incredibly useful, but it takes time to get used to it.

I haven’t thoroughly researched other alternatives, but Athens seems to fill the gap: it’s open-source, it looks user-friendly and it is not tied to an editor.

Personally, I prefer to stick with org-roam for three main reasons:

- I am already a heavy user of Emacs and org-mode and a lot of features and Emacs tools integrate well with org-roam

- I can export the files to my site (I detail how in Publishing)

- The source files are plain text (with org-mode markup). This means that even if Emacs and org-mode and all related software were to disappear, or more probably, if I decide to manage my notes with something else, I can still export all the content and translate it into whichever format I want

If you are interested in Emacs and would like to see my setup, I publish my dotfiles. You can also read the detailed and commented version of my org-mode configuration, or jump directly to the org-roam configuration.

Organizing knowledge in org-roam

The goal of org-roam is to create a web of notes linked between each other. By linking notes in a non-hierarchical way, one can make connections between topics and remember them without going through the hassle of creating a hierarchical tag system where it’s never clear if a note should belong to tag “A” or its parent “B”, etc.

There are plenty of different techniques for taking notes with a tool like this, but I don’t follow any particular one.

This is what an org-roam note looks like as raw source (Emacs makes this much prettier in practice):

:PROPERTIES:
:ID: 9fd080fa-a9a1-4d85-a6bf-682b41a67b72
:ROAM_ALIASES: "ML"
:END:
#+TITLE: Machine learning

- tags :: [[id:1b23dc1d-c276-4f95-866a-ad8e37dd544f][Artificial Intelligence]]

Machine learning is about constructing algorithms that can approximate complex
functions from observations of input/output pairs. Machine learning is related to
[[id:ce8b2ebe-f42e-4e4b-b0af-2a4563ef9fc0][Statistics]] since its goal is to make
predictions based on data.

Examples of such functions include:

- [[id:33ebc467-8c6c-43bc-b8b4-ba1971c75a37][Image classification]]
- Time-series prediction
- [[id:e72f23c2-d3a7-47df-87a6-5de09f820ecf][Language modeling]]

The first 5 lines are a header containing the title of the note, an alias (an alternative name I can use to search for the note within org-roam) and an ID (automatically generated with org-id).

The rest is org-mode markup with some links to other notes (they also have unique IDs).
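To make the link syntax concrete, here is a minimal Python sketch that pulls the ID links out of a note's raw text with a regular expression. This is not part of my setup (org-roam does this parsing itself); the function name extract_id_links is made up for illustration, and the pattern assumes every link has both an ID and a description, as in the note above.

```python
import re

# Matches org-mode ID links of the form [[id:<uuid>][<description>]].
# Assumption: links without a description part are not handled.
ID_LINK = re.compile(r"\[\[id:([0-9a-fA-F-]+)\]\[([^\]]*)\]\]")


def extract_id_links(org_text: str) -> list[tuple[str, str]]:
    """Return (target_id, description) pairs for every ID link in the text."""
    return ID_LINK.findall(org_text)


note = """\
:PROPERTIES:
:ID: 9fd080fa-a9a1-4d85-a6bf-682b41a67b72
:ROAM_ALIASES: "ML"
:END:
#+TITLE: Machine learning

- tags :: [[id:1b23dc1d-c276-4f95-866a-ad8e37dd544f][Artificial Intelligence]]

Machine learning is related to
[[id:ce8b2ebe-f42e-4e4b-b0af-2a4563ef9fc0][Statistics]].
"""

for target_id, description in extract_id_links(note):
    print(target_id, "->", description)
```

Each pair corresponds to one outgoing edge of the note, which is the same information org-roam caches in its database.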

Publishing

Sharing knowledge as much as possible is essential. I also think that it’s even better to share the learning process and the steps to acquiring that knowledge because this is what will help your brain make the right connections and remember what you wrote.

My notes are a constant work in progress. I will change them, enrich them or delete them as I learn. Let this be a disclaimer too: I might write notes that are not true or that are solely the result of my uninformed opinion.

Other people might have something to say about these thoughts and some have contacted me to discuss them. Interesting discussions have started from these notes.

Exporting the notes

The first step to publishing is to export the org-roam notes to the language of the web: HTML.

Simple HTML

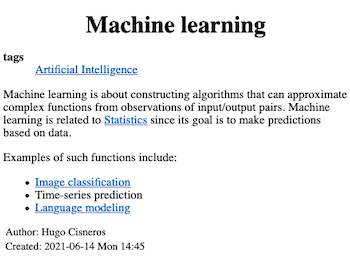

The simplest way is to use org’s embedded HTML export function (for example you can call M-x org-html-export-to-html when the note is open). For the note above, this will give you this simple HTML result:

Note exported to plain HTML

Plain HTML + styling is more than enough to get a decent site with all your notes.

Hugo

I use a static site generator called Hugo for my website, so I chose to go down the slightly more complicated path of exporting the notes to Hugo’s Markdown format and using Hugo to generate the final HTML. This allows for more flexibility and the possibility to automatically generate complex pages like this one.

If you have ox-hugo installed, exporting a note is as simple as calling M-x org-hugo-export-wim-to-md on your note. You should read the ox-hugo documentation to configure your site source path and other useful parameters.

I automated the process with a few Elisp functions that I put in a Gist. The last function loops through a file containing a note path on each line. For each line, it exports the note and deletes the line from the file.
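The real implementation is in Elisp, but the logic of that last function is simple enough to sketch in Python. Everything here is illustrative: process_export_queue, the queue file name and the export callback are hypothetical stand-ins, and the callback only records the path instead of calling ox-hugo.

```python
import pathlib
import tempfile
from typing import Callable


def process_export_queue(queue_file: pathlib.Path,
                         export: Callable[[str], None]) -> int:
    """Export every note listed in `queue_file`, removing each line once done.

    `export` is a hypothetical callback standing in for the real exporter.
    Returns the number of notes exported.
    """
    pending = [line for line in queue_file.read_text().splitlines() if line.strip()]
    exported = 0
    while pending:
        export(pending[0])
        pending.pop(0)
        # Rewrite the queue after each export so an interrupted run can resume.
        queue_file.write_text("".join(path + "\n" for path in pending))
        exported += 1
    return exported


# Usage with a temporary queue file and a callback that only records paths.
with tempfile.TemporaryDirectory() as tmp:
    queue = pathlib.Path(tmp) / "export_queue.txt"
    queue.write_text("notes/machine_learning.org\nnotes/statistics.org\n")
    seen: list[str] = []
    count = process_export_queue(queue, seen.append)
    remaining = queue.read_text()
```

Deleting each line right after its export means the file always lists exactly the notes that still need to be published.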

The graph

A great advantage of org-roam is that it stores all its useful information in a SQLite database. Don’t worry if you deleted it! Org-roam can rebuild it from the notes and all the links inside them. The database caches a lot of useful data but everything is still in the notes.

The database has a table for the nodes and a table for the links, so there isn’t much work to do to get a graph!

Read the org-roam DB

My org-roam database is at ~/.emacs.d/org-roam.db. Here is the code that extracts all the information to build my note graph from the DB with 2 SQL queries and constructs a Python graph with the networkx library.

SQL experts might scream at this. These queries were the first that came to mind, but there might be a better way of doing this! I’m dealing with small databases so performance isn’t a problem.

import pathlib
import sqlite3

import networkx as nx


def to_rellink(inp: str) -> str:
    """Return the file name without its directory and extension."""
    return pathlib.Path(inp).stem


def build_graph() -> nx.DiGraph:
    """Build a graph from the org-roam database."""
    graph = nx.DiGraph()
    home = pathlib.Path.home()
    conn = sqlite3.connect(home / ".emacs.d" / "org-roam.db")

    # Query all nodes first (level 0 = file-level notes)
    nodes = conn.execute("SELECT file, id, title FROM nodes WHERE level = 0;")

    # A double JOIN to get all nodes that are connected by a link
    links = conn.execute("SELECT n1.id, n2.id FROM nodes AS n1 "
                         "JOIN links ON n1.id = links.source "
                         "JOIN nodes AS n2 ON links.dest = n2.id "
                         "WHERE links.type = '\"id\"';")

    # Populate the graph (values in the DB are wrapped in double quotes)
    graph.add_nodes_from((n[1], {
        "label": n[2].strip("\""),
        "tooltip": n[2].strip("\""),
        "lnk": to_rellink(n[0]).lower(),
        "id": n[1].strip("\"")
    }) for n in nodes)
    graph.add_edges_from(n for n in links if n[0] in graph.nodes and n[1] in graph.nodes)

    conn.close()
    return graph
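If you want to try this kind of query without touching your real database, here is a small sketch that runs the same node query and double join against an in-memory SQLite database. The schema is a simplified stand-in containing only the columns used above, and the rows are toy data; I am also assuming values are stored wrapped in double quotes, which is why the code above strips them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Simplified stand-in for the org-roam schema: only the columns queried above.
conn.executescript("""
    CREATE TABLE nodes (id TEXT PRIMARY KEY, file TEXT, level INTEGER, title TEXT);
    CREATE TABLE links (source TEXT, dest TEXT, type TEXT);
""")
conn.executemany(
    "INSERT INTO nodes VALUES (?, ?, ?, ?)",
    [
        ('"a1"', '"/notes/machine_learning.org"', 0, '"Machine learning"'),
        ('"b2"', '"/notes/statistics.org"', 0, '"Statistics"'),
    ],
)
conn.executemany(
    "INSERT INTO links VALUES (?, ?, ?)",
    [
        ('"a1"', '"b2"', '"id"'),                      # note-to-note link: kept
        ('"a1"', '"//example.org"', '"https"'),        # web link: filtered out
    ],
)

nodes = conn.execute(
    "SELECT file, id, title FROM nodes WHERE level = 0 ORDER BY id;"
).fetchall()
edges = conn.execute(
    "SELECT n1.id, n2.id FROM nodes AS n1 "
    "JOIN links ON n1.id = links.source "
    "JOIN nodes AS n2 ON links.dest = n2.id "
    "WHERE links.type = '\"id\"';"
).fetchall()
conn.close()

titles = [title.strip('"') for _, _, title in nodes]
print(titles)
print(edges)
```

Only the link whose destination is another note survives the join, which is exactly what is needed for the edges of the graph.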

Some graph analysis

This second script starts by constructing the graph with the function above. Then, it applies 3 graph algorithms:

- Compute the PageRank score for each vertex and store it as the centrality attribute. This will be used to size the nodes

- Do a clustering of the nodes into N_COM clusters and store the community in the vertex attribute communityLabel

- Add N_MISSING “predicted” links to enable discovery of new hidden connections between nodes. This uses networkx’s implementation of Soundarajan and Hopcroft’s method for link prediction

import itertools
import json
import sys

import networkx as nx
import networkx.algorithms.link_analysis.pagerank_alg as pag
import networkx.algorithms.community as com
from networkx.readwrite import json_graph

from build_graph_from_org_roam_db import build_graph

N_COM = 7  # Desired number of communities
N_MISSING = 20  # Number of predicted missing links
MAX_NODES = 200  # Number of nodes in the final graph


def compute_centrality(dot_graph: nx.DiGraph) -> None:
    """Add a `centrality` attribute to each node with its PageRank score.

    Scores are rescaled to [0, 1] and only the MAX_NODES most central
    nodes are kept.
    """
    simp_graph = nx.Graph(dot_graph)
    central = pag.pagerank(simp_graph)
    min_cent = min(central.values())
    central = {i: central[i] - min_cent for i in central}
    max_cent = max(central.values())
    central = {i: central[i] / max_cent for i in central}
    nx.set_node_attributes(dot_graph, central, "centrality")
    sorted_cent = sorted(dot_graph, key=lambda x: dot_graph.nodes[x]["centrality"])
    # Drop everything except the MAX_NODES highest-ranked nodes
    for n in sorted_cent[:-MAX_NODES]:
        dot_graph.remove_node(n)


def compute_communities(dot_graph: nx.DiGraph, n_com: int) -> None:
    """Add a `communityLabel` attribute to each node according to their
    computed community.
    """
    simp_graph = nx.Graph(dot_graph)
    communities = com.girvan_newman(simp_graph)
    # Girvan-Newman yields successively finer partitions; pick one of them
    labels = [tuple(sorted(c) for c in unities) for unities in
              itertools.islice(communities, n_com - 1, n_com)][0]
    label_dict = {l_key: i for i in range(len(labels)) for l_key in labels[i]}
    nx.set_node_attributes(dot_graph, label_dict, "communityLabel")


def add_missing_links(dot_graph: nx.DiGraph, n_missing: int) -> None:
    """Add some missing links to the graph by using the top-ranking
    nonexistent links by resource allocation index.
    """
    simp_graph = nx.Graph(dot_graph)
    preds = nx.ra_index_soundarajan_hopcroft(simp_graph, community="communityLabel")
    new = sorted(preds, key=lambda x: -x[2])[:n_missing]
    for link in new:
        sys.stderr.write(f"Predicted edge {link[0]} {link[1]}\n")
        dot_graph.add_edge(link[0], link[1], predicted=link[2])


if __name__ == "__main__":
    sys.stderr.write("Reading graph...")
    DOT_GRAPH = build_graph()
    compute_centrality(DOT_GRAPH)
    compute_communities(DOT_GRAPH, N_COM)
    add_missing_links(DOT_GRAPH, N_MISSING)
    sys.stderr.write("Done\n")
    JS_GRAPH = json_graph.node_link_data(DOT_GRAPH)
    sys.stdout.write(json.dumps(JS_GRAPH))
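To give an intuition for the link-prediction step, here is a minimal pure-Python sketch of the resource allocation index with community information, as I understand it: for an unlinked pair of nodes in the same community, every common neighbour that also belongs to that community contributes one over its degree. The function name, the adjacency dictionary and the community labels below are made up for illustration; the script above relies on networkx for this.

```python
from itertools import combinations


def ra_index_with_communities(
    adjacency: dict[str, set[str]],
    community: dict[str, int],
) -> list[tuple[str, str, float]]:
    """Score every unlinked pair of nodes, best candidates first.

    For a pair (u, v), each common neighbour w that shares the community of
    both u and v contributes 1 / degree(w) to the score.
    """
    scores = []
    for u, v in combinations(sorted(adjacency), 2):
        if v in adjacency[u]:
            continue  # already linked, nothing to predict
        score = 0.0
        if community[u] == community[v]:
            for w in adjacency[u] & adjacency[v]:
                if community[w] == community[u]:
                    score += 1.0 / len(adjacency[w])
        scores.append((u, v, score))
    return sorted(scores, key=lambda s: -s[2])


# Toy undirected graph: a and b both link to c, c links to d, d links to e.
adjacency = {
    "a": {"c"},
    "b": {"c"},
    "c": {"a", "b", "d"},
    "d": {"c", "e"},
    "e": {"d"},
}
community = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1}

ranked = ra_index_with_communities(adjacency, community)
best = ranked[0]
print(best)
```

Here the pair a/b comes out on top: both notes point to c, which sits in the same community and has three neighbours, so the score is 1/3. Pairs that straddle two communities score zero.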

The script writes the graph in JSON format to stdout with networkx’s JSON export function. It looks something like this (you can find the actual graph represented on my notes page here):

{
  "directed" : true,
  "graph" : {},
  "links" : [
    {
      "source" : "012f1087-bae9-4bbf-b468-114169782b5b",
      "target" : "2fc873e6-d61d-43e3-9dd1-635f54b4114f",
      "predicted" : 0.676190476190476
    },
    {
      "source" : "012f1087-bae9-4bbf-b468-114169782b5b",
      "target" : "89569187-fcdc-4ebf-8608-75bfb129e8c2"
    },
    ...
  ],
  "nodes": [
    {
      "centrality" : 0.0848734602948687,
      "communityLabel" : 0,
      "id" : "012f1087-bae9-4bbf-b468-114169782b5b",
      "label" : "CPPN",
      "lnk" : "cppn",
      "tooltip" : "CPPN"
    },
    {
      "centrality" : 0.0593788434729073,
      "communityLabel" : 0,
      "id" : "b495ae71-9ed6-4ef1-8917-45b490bca56f",
      "label" : "Neural tangent kernel",
      "lnk" : "neural_tangent_kernel",
      "tooltip" : "Neural tangent kernel"
    },
    ...
  ]
}
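Anything that consumes this file only needs to know two things: nodes are looked up by their id, and a link is a predicted one if it carries a predicted score. Here is a short Python sketch of that reading logic on a tiny document of the same shape. The ids n1, n2 and n3 and the rounded values are toy data, not taken from my actual graph.

```python
import json

# A tiny document in the same node-link shape as the output above (toy values).
raw = """
{
  "directed": true,
  "graph": {},
  "links": [
    {"source": "n1", "target": "n2", "predicted": 0.67},
    {"source": "n1", "target": "n3"}
  ],
  "nodes": [
    {"id": "n1", "label": "CPPN", "centrality": 0.08, "communityLabel": 0},
    {"id": "n2", "label": "Neural tangent kernel", "centrality": 0.06, "communityLabel": 0},
    {"id": "n3", "label": "Statistics", "centrality": 1.0, "communityLabel": 1}
  ]
}
"""

graph = json.loads(raw)
labels = {node["id"]: node["label"] for node in graph["nodes"]}

# Links carrying a "predicted" score were added by link prediction;
# the others come from the notes themselves.
predicted = [link for link in graph["links"] if "predicted" in link]
actual = [link for link in graph["links"] if "predicted" not in link]

for link in predicted:
    source, target = labels[link["source"]], labels[link["target"]]
    print(f"{source} -> {target} (score {link['predicted']})")
```

On the frontend the same distinction can be used to draw predicted links differently from real ones.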

Some frontend with D3.js

You will find the JavaScript code for handling the D3 graph construction here. To create the graph I started from this D3 Observable tutorial on making a force-directed graph. Getting something decent-looking is straightforward with D3.

The main SVG source where the graph is drawn is defined in the <div> with id main-graph here. There are two components in there: a filter definition and the main rectangle where the graph is drawn.

The filter is shown below. I use it for the tooltips that appear when you point at a vertex with your mouse. The tooltips are composed of two elements: the text and a background, which is a rectangle the size of the text’s bounding box with the text cut out (this is what the filter does).

<defs>
  <filter x="0" y="0" width="1" height="1" id="solid">
    <feFlood flood-color="#f7f7f7" flood-opacity="0.9"/>
    <feComposite in="SourceGraphic" operator="xor" />
  </filter>
</defs>

Conclusion

That’s all I have for you. I realize this might look like a complicated bunch of scripts, but this setup works well for me now. Jethro Kuan’s dotfiles and Braindump source were a great source of inspiration. They notably helped with the Elisp part, with which I have less experience.

Getting into the habit of taking notes has been a great experience for me. I no longer fear forgetting ideas or useful thoughts as long as I write them in my “second brain”.

I hope this post was informative and that it will help you get a nice note website up and running! Enjoy!
