Adversarial examples in reinforcement learning

Adversarial examples in computer vision

Adversarial examples in NLP

TextAttack: a Python library for creating and running adversarial attacks on text.

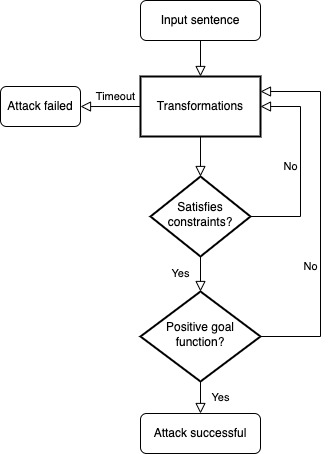

Figure 1: This diagram illustrates the standard flow of an adversarial attack on text data.

The three components of an adversarial attack on text:

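- Goal function: This is a function that takes an original sentence and an attacked sentence, and computes a score together with the result of the attack (successful or not). In practice, it is built on top of a victim model, typically a classifier trained on a specific dataset (sentiment analysis, fake news detection, etc.). For example, an untargeted attack succeeds when the model's predicted label for the attacked sentence differs from its prediction for the original sentence.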

- Transformations: This is the set of text modifications (for example, replacing a word with a synonym) that are attempted in order to flip the result of the goal function. There can be multiple transformations, and they are applied according to a general search strategy (called the search method in TextAttack).

- Constraints: This is a set of constraints that an attacked sentence must always satisfy. They ensure that the attack does not change the content of the original sentence too much. Examples include a maximum number of perturbed tokens, a maximum word-embedding distance between original and substituted words, consistent part-of-speech tags, or a maximum distance in a sentence-embedding space.
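To make the interplay between these components concrete, here is a minimal, self-contained sketch in plain Python. It does not use the TextAttack API. The victim model is a hypothetical keyword-counting sentiment classifier, the transformation draws from a hypothetical hand-written synonym table, the only constraint is an assumed budget on the fraction of perturbed words (`MAX_PERTURBED_RATIO`), and the search strategy is a simple greedy left-to-right word swap. All names (`goal_function`, `word_swaps`, `satisfies_constraints`, `greedy_attack`) are illustrative assumptions, not library functions.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical victim model: a keyword-count sentiment classifier standing in
# for a real trained classifier (label 1 = positive, 0 = negative).
POSITIVE = {"good", "great", "excellent", "enjoyable"}
NEGATIVE = {"bad", "awful", "boring", "dull"}


def margin(words: List[str]) -> int:
    """Number of positive keywords minus number of negative keywords."""
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def predict(words: List[str]) -> int:
    return 1 if margin(words) > 0 else 0


def goal_function(original: List[str], attacked: List[str]) -> Tuple[int, bool]:
    """Untargeted goal: return (score, success).

    The score grows as the attacked sentence moves away from the original
    label; success means the predicted label has flipped.
    """
    original_label = predict(original)
    m = margin(attacked)
    score = -m if original_label == 1 else m
    return score, predict(attacked) != original_label


# Hypothetical synonym table; real attacks usually derive candidates from
# word embeddings or a thesaurus.
SYNONYMS: Dict[str, List[str]] = {
    "great": ["fine"],
    "enjoyable": ["watchable"],
    "movie": ["film"],
}


def word_swaps(words: List[str], index: int) -> List[List[str]]:
    """Transformation: replace the word at `index` with each known synonym."""
    return [words[:index] + [s] + words[index + 1:] for s in SYNONYMS.get(words[index], [])]


MAX_PERTURBED_RATIO = 0.5  # assumed budget: at most half of the words may change


def satisfies_constraints(original: List[str], candidate: List[str]) -> bool:
    """Constraint: limit the fraction of perturbed words (swaps keep length)."""
    changed = sum(a != b for a, b in zip(original, candidate))
    return changed <= MAX_PERTURBED_RATIO * len(original)


def greedy_attack(sentence: str) -> Optional[str]:
    """Search strategy: left-to-right greedy word swap.

    At each position, keep the valid swap with the best goal score; return
    as soon as an accepted swap flips the label, or None if none does.
    """
    original = sentence.split()
    current = list(original)
    best_score, _ = goal_function(original, current)
    for i in range(len(current)):
        for candidate in word_swaps(current, i):
            if not satisfies_constraints(original, candidate):
                continue
            score, success = goal_function(original, candidate)
            if score > best_score:
                best_score, current = score, candidate
                if success:
                    return " ".join(current)
    return None
```

For example, `greedy_attack("a great and enjoyable movie")` returns `"a fine and watchable movie"`: two swaps remove both positive keywords and flip the toy prediction while staying within the perturbation budget. On the one-word input `"great"`, the only swap would change 100% of the words, so the constraint rejects it and the function returns `None`. In a real attack, the goal function would query a trained model's output probabilities and the constraints would include semantic checks such as embedding distances.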