- tags: Implicit neural representations, NeRF
- source: (Chng et al. 2022)
- web: https://sfchng.github.io/garf/

Summary

This paper introduces a NeRF architecture that uses no positional embedding and relies instead on Gaussian activation functions. These activation functions were introduced as part of Gaussian-MLPs in (Ramasinghe, Lucey 2022).
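For reference, a Gaussian activation is usually written with a width parameter σ. We assume that form here; the exact parameterization used in the papers may differ slightly. Its derivative is the same Gaussian multiplied by a linear term, so the activation is infinitely differentiable:

$$
\psi(x) = \exp\left(-\frac{x^2}{2\sigma^2}\right), \qquad
\psi'(x) = -\frac{x}{\sigma^2}\,\exp\left(-\frac{x^2}{2\sigma^2}\right)
$$

With σ = 1/√2 this reduces to ψ(x) = exp(-x²), the form used in the implementation below.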

This alternative activation function enables GARF to model the first derivatives of the target signal better than MLPs with positional embeddings (PE-MLPs) (Mildenhall et al. 2020; Sitzmann et al. 2020). It also overcomes the initialization issues of SIRENs (Sitzmann et al. 2020) without requiring a dedicated initialization scheme.
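To make concrete what GARF removes, here is a minimal NumPy sketch of the frequency positional encoding used by PE-MLPs such as NeRF (Mildenhall et al. 2020). It is not part of GARF. The function name `positional_encoding` and the default of 10 frequency bands are our own choices for illustration, and the coordinates are assumed to be already normalized.

```python
import numpy as np

def positional_encoding(p, num_bands=10):
    """Map coordinates p of shape (N, D) to features of shape (N, 2 * D * num_bands).

    Each coordinate goes through sin and cos at frequencies 2**k * pi,
    for k = 0 .. num_bands - 1, in the style of NeRF's PE-MLPs.
    """
    p = np.asarray(p, dtype=np.float64)
    freqs = (2.0 ** np.arange(num_bands)) * np.pi          # (num_bands,)
    angles = p[:, :, None] * freqs                         # (N, D, num_bands)
    feats = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)
    return feats.reshape(p.shape[0], -1)                   # (N, 2 * D * num_bands)

coords = np.array([[0.0, 0.0], [0.5, -0.25]])
encoded = positional_encoding(coords)
print(encoded.shape)  # (2, 40)
```

The features are grouped per coordinate (all sines, then all cosines) rather than interleaved as in NeRF; the ordering does not matter to the MLP. A GARF-style network skips this step entirely and feeds the raw coordinates to Gaussian-activated layers.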

Comments

Implementation

We give a very simple implementation of this paper’s main idea on a 2D image to show how straightforward it is to use.

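```python
import os

import numpy as np
import skimage.data
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers as kl

# 512x512 grayscale test image, scaled from uint8 to [0, 1)
img = skimage.data.camera().astype('f4') / 256.0

plt.imshow(img, cmap='gray')
# matplotlib does not expand "~" itself, so expand it explicitly
plt.savefig(os.path.expanduser("~/img/garf1.png"))

# Make a meshgrid of (row, column) coordinates normalized to [0, 1)
# (dividing both axes by len(img) assumes a square image)
normalized_grid = np.mgrid[:img.shape[0], :img.shape[1]] / len(img)

# One (row, column) pair per pixel, mapped to [-1, 1)
X = normalized_grid.reshape((2, -1)).T.astype("f4") * 2 - 1
X *= 32  # this scaling factor makes the training easier

# Pixel intensities, in the same row-major order as X
y = img.reshape((-1, 1))
```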
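A quick way to sanity-check the grid construction is to run the same lines on a tiny 4×4 array. The array `toy` below is a hypothetical stand-in for `img`, not part of the original: each row of `X_toy` is a scaled (row, column) coordinate, and `y_toy` lists the pixel values in the same row-major order.

```python
import numpy as np

# Toy 4x4 "image" whose values are 0..15, so the pairing is easy to read
toy = np.arange(16, dtype="f4").reshape(4, 4)

grid = np.mgrid[:toy.shape[0], :toy.shape[1]] / len(toy)  # (2, 4, 4), values in [0, 1)
X_toy = grid.reshape((2, -1)).T.astype("f4") * 2 - 1      # (16, 2), values in [-1, 1)
X_toy *= 32                                               # same scaling as above
y_toy = toy.reshape((-1, 1))                              # (16, 1), row-major

print(X_toy[:3].tolist())          # [[-32.0, -32.0], [-32.0, -16.0], [-32.0, 0.0]]
print(y_toy[:3].ravel().tolist())  # [0.0, 1.0, 2.0]
```

The first three samples are the first three pixels of row 0, so coordinates and intensities line up as intended.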

```python
def make_model(activation):
    # Coordinate MLP: (row, col) -> intensity, with no positional embedding
    return keras.Sequential([
        kl.InputLayer((2,)),
        kl.Dense(300),
        activation(),
        kl.Dense(300),
        activation(),
        kl.Dense(300),
        activation(),
        kl.Dense(1),
        kl.Activation(tf.nn.sigmoid),  # squash the output to (0, 1)
    ])

# The Gaussian activation function from the paper, exp(-x^2)
activation = lambda: kl.Activation(lambda x: tf.math.exp(-x * x))

model = make_model(activation)
model.compile(loss='mse', optimizer='adam')
model.summary()
```
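To make explicit what the Keras model computes, here is a hedged NumPy re-implementation of its forward pass. We assume Keras's default `Dense` initialization (Glorot-uniform kernels, zero biases), which is what the code above gets since it passes no initializer. The helper names `glorot_uniform`, `init_params`, and `forward` are ours; nothing here is specific to the paper beyond the Gaussian activation.

```python
import numpy as np

rng = np.random.default_rng(0)

def glorot_uniform(fan_in, fan_out):
    # Same limit as Keras' default GlorotUniform initializer
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out))

def init_params(widths=(2, 300, 300, 300, 1)):
    # One (kernel, bias) pair per Dense layer; biases start at zero
    return [(glorot_uniform(a, b), np.zeros(b)) for a, b in zip(widths[:-1], widths[1:])]

def gaussian(x):
    return np.exp(-x * x)

def forward(params, x):
    *hidden, (w_out, b_out) = params
    for w, b in hidden:
        x = gaussian(x @ w + b)      # Dense followed by the Gaussian activation
    z = x @ w_out + b_out            # final Dense(1)
    return 1.0 / (1.0 + np.exp(-z))  # sigmoid, as in the last Keras layer

params = init_params()
coords = rng.uniform(-32, 32, size=(5, 2))  # five random input coordinates
print(forward(params, coords).shape)        # (5, 1)
```

Note that the same plain Glorot initialization is used for every layer; no SIREN-style special scheme is involved.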

We get the following simple model description:

```
Model: "sequential_3"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 dense_12 (Dense)            (None, 300)               900
 activation_12 (Activation)  (None, 300)               0
 dense_13 (Dense)            (None, 300)               90300
 activation_13 (Activation)  (None, 300)               0
 dense_14 (Dense)            (None, 300)               90300
 activation_14 (Activation)  (None, 300)               0
 dense_15 (Dense)            (None, 1)                 301
 activation_15 (Activation)  (None, 1)                 0
=================================================================
Total params: 181,801
Trainable params: 181,801
Non-trainable params: 0
_________________________________________________________________
```
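The parameter counts in this summary follow directly from the layer widths: a `Dense` layer with n inputs and m outputs has n·m weights plus m biases. A short check:

```python
# Layer widths of the model above: 2 inputs, three hidden layers of 300, 1 output
widths = [2, 300, 300, 300, 1]

per_layer = [n * m + m for n, m in zip(widths[:-1], widths[1:])]
print(per_layer)       # [900, 90300, 90300, 301]
print(sum(per_layer))  # 181801
```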

We now fit the model, using a large batch size since each sample is a single pixel.

```python
model.fit(X, y, batch_size=1024, epochs=100)
```
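For a sense of scale: the camera image is 512×512, so one epoch visits 262,144 pixel samples. A quick calculation of how many gradient updates this `fit` call performs, assuming Keras's usual behavior of one update per (possibly partial) batch:

```python
import math

num_pixels = 512 * 512  # one training sample per pixel of the camera image
batch_size = 1024
epochs = 100

steps_per_epoch = math.ceil(num_pixels / batch_size)
print(steps_per_epoch)           # 256
print(steps_per_epoch * epochs)  # 25600
```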

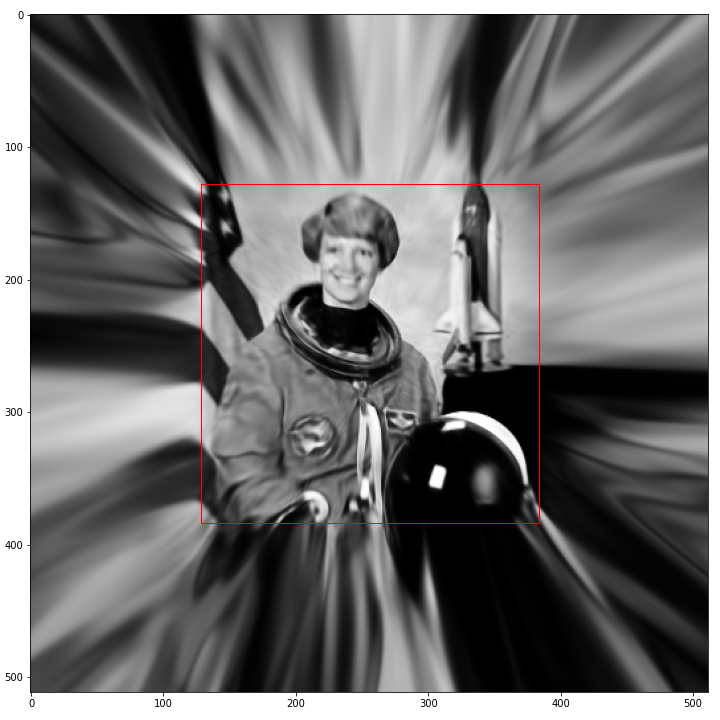

Finally, we show the fitted image together with the neighboring “imagined” pixels around it.

```python
import os
from matplotlib.patches import Rectangle

# Scale the grid by a factor of 2 to predict pixels outside
# of the original image (same 512x512 output, twice the extent)
y_pred = model.predict(X * 2, batch_size=8192)

plt.figure(figsize=(10, 10))
plt.imshow(y_pred.reshape(img.shape), cmap="gray")

# Add a red square around the region covered by the original image
plt.gca().add_patch(Rectangle(
    (128, 128), 256, 256, linewidth=1, edgecolor="r", facecolor="none"
))
plt.savefig(os.path.expanduser("~/img/garf2.png"))
```
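Why does the red square sit at (128, 128) with side 256? Multiplying `X` by 2 spreads the same 512×512 grid over [-64, 64) instead of [-32, 32), so only the middle half of each axis falls inside the training range. A small check along one axis, assuming a 512-pixel side as for the camera image:

```python
import numpy as np

n = 512
axis = (np.arange(n) / n * 2 - 1) * 32  # one axis of X, in [-32, 32)
scaled = axis * 2                       # the coordinates passed to model.predict

# Output pixels whose coordinate lies within the original training range
inside = np.flatnonzero((scaled >= axis.min()) & (scaled <= axis.max()))
print(inside[0], inside.size)           # 128 256
```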

The reconstruction of the image itself is reasonably good, but the impressive part is how natural the imagined pixels outside it look. All of this is achieved without any specific initialization scheme or positional embeddings.

Bibliography

- Shin-Fang Chng, Sameera Ramasinghe, Jamie Sherrah, Simon Lucey. 2022. "GARF: Gaussian Activated Radiance Fields for High Fidelity Reconstruction and Pose Estimation". arXiv:2204.05735 [cs]. http://arxiv.org/abs/2204.05735.

- Sameera Ramasinghe, Simon Lucey. 2022. "Beyond Periodicity: Towards a Unifying Framework for Activations in Coordinate-MLPs". arXiv:2111.15135 [cs]. http://arxiv.org/abs/2111.15135.

- Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, Ren Ng. 2020. "NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis". arXiv:2003.08934 [cs]. http://arxiv.org/abs/2003.08934.

- Vincent Sitzmann, Julien N. P. Martel, Alexander W. Bergman, David B. Lindell, Gordon Wetzstein. 2020. "Implicit Neural Representations with Periodic Activation Functions". arXiv:2006.09661 [cs, eess]. http://arxiv.org/abs/2006.09661.