- source

- (Lu et al. 2021)

- tags

- Transformers

Summary

Different neural network architectures encode different inductive biases. For example, convolutional neural networks apply local, translation-equivariant operations, and recurrent neural networks process data sequentially.

One can use these biases in randomly initialized networks as a basis for interesting computations. This is one of the motivations for reservoir computing with echo-state networks, which combine a fixed, randomly initialized recurrent neural network with a simple trainable linear readout to perform complex computations. The intuition behind this model is that interesting computations may already be happening inside the random RNN (and they probably become more likely as the RNN gets bigger). The linear layer selects the useful computations and filters out the rest to produce the output.
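
Below is a minimal echo-state network sketch in Python with NumPy. It assumes a toy task: predicting a sine wave five steps ahead. The reservoir size, spectral-radius rescaling, washout length and ridge penalty are illustrative choices. Fitting the readout by ridge regression is one common option, not the only one. Only `w_out` is learned; `w_in` and `w` stay at their random initial values.

```python
import numpy as np


def make_reservoir(n_inputs, n_units, spectral_radius=0.9, seed=0):
    """Create the fixed random input and recurrent weights (never trained)."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=(n_units, n_inputs))
    w = rng.uniform(-0.5, 0.5, size=(n_units, n_units))
    # Rescale so the largest |eigenvalue| equals spectral_radius (a common ESN heuristic).
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    return w_in, w


def run_reservoir(w_in, w, inputs):
    """Drive the random RNN with a 1-D input sequence and record every hidden state."""
    x = np.zeros(w.shape[0])
    states = []
    for u in inputs:
        x = np.tanh(w_in @ np.atleast_1d(u) + w @ x)
        states.append(x)
    return np.array(states)


def fit_readout(states, targets, ridge=1e-6):
    """Fit the only trainable part, a linear readout, by ridge regression."""
    gram = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(gram, states.T @ targets)


# Toy task: from the reservoir state at step t, predict the signal at step t + horizon.
horizon = 5
signal = np.sin(np.arange(0, 60, 0.1))
inputs, targets = signal[:-horizon], signal[horizon:]

w_in, w = make_reservoir(n_inputs=1, n_units=100)
states = run_reservoir(w_in, w, inputs)
washout = 100  # discard the initial transient while the reservoir forgets its zero state
w_out = fit_readout(states[washout:], targets[washout:])

predictions = states[washout:] @ w_out
mse = float(np.mean((predictions - targets[washout:]) ** 2))
# Naive baseline: predict that the signal stays at its current value.
baseline = float(np.mean((inputs[washout:] - targets[washout:]) ** 2))
print(f"readout MSE {mse:.2e} vs. persistence baseline {baseline:.2e}")
```

The readout error should come out well below the persistence baseline. This suggests that the fixed random dynamics already contain what a purely linear layer needs to make the prediction.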

This paper investigates a similar idea. However, instead of random models, it studies the capacity of pretrained models, more precisely pretrained transformers.

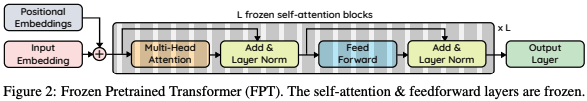

In their setup, all the weights of a transformer are frozen except for an input embedding layer, the positional embeddings, the layer norm parameters and a simple output layer. The architecture is shown in the Figure below taken from the paper:
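
To make the freezing rule concrete, here is a rough parameter tally in plain Python. The shapes are assumptions loosely modelled on a GPT-2-small-sized transformer (768-dimensional, 12 layers, 1024 positions). The pretrained token-embedding matrix is assumed to be replaced by the new input layer, and the input is assumed to be CIFAR-10 cut into 4x4 RGB patches. The sketch only counts which groups are trained under the rule described above; it does not load or run a real model.

```python
# Hypothetical GPT-2-small-like shapes; the paper's exact configuration may differ.
D_MODEL = 768
N_LAYERS = 12
D_FF = 4 * D_MODEL
N_POSITIONS = 1024


def linear(n_in, n_out):
    """Parameter count of a dense layer with bias."""
    return n_in * n_out + n_out


def parameter_groups(input_dim, n_classes):
    """Map each parameter group to (count, trainable?) under the freezing rule."""
    groups = {
        "input_layer": (linear(input_dim, D_MODEL), True),       # new, trained
        "positional_embeddings": (N_POSITIONS * D_MODEL, True),  # pretrained, fine-tuned
        "final_layer_norm": (2 * D_MODEL, True),                 # scale + shift
        "output_layer": (linear(D_MODEL, n_classes), True),      # new, trained
    }
    for i in range(N_LAYERS):
        # Two LayerNorms per block, each with a scale and a shift vector: trained.
        groups[f"block{i}.layer_norms"] = (2 * 2 * D_MODEL, True)
        # Self-attention (QKV projection + output projection): frozen.
        groups[f"block{i}.attention"] = (
            linear(D_MODEL, 3 * D_MODEL) + linear(D_MODEL, D_MODEL), False)
        # Feed-forward MLP: frozen.
        groups[f"block{i}.mlp"] = (linear(D_MODEL, D_FF) + linear(D_FF, D_MODEL), False)
    return groups


def count(groups):
    """Return (trainable, frozen) parameter totals."""
    trainable = sum(n for n, is_trained in groups.values() if is_trained)
    frozen = sum(n for n, is_trained in groups.values() if not is_trained)
    return trainable, frozen


# Each 4x4 RGB patch becomes one token with 4 * 4 * 3 = 48 values.
trainable, frozen = count(parameter_groups(input_dim=48, n_classes=10))
total = trainable + frozen
print(f"trainable: {trainable:,} of {total:,} ({trainable / total:.2%})")
# trainable: 870,154 of 85,887,754 (1.01%)
```

Under these assumptions, only about 1% of the parameters are trained, and most of those are the positional embeddings.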

This model is tested on a set of tasks:

- Bit memory: a task about memorizing bits in a sequence

- Bit XOR: computing the element-wise XOR of two bitstrings

- ListOps: this task is akin to an elementary interpreter which has to perform operations on lists of integers

- MNIST: digit classification

- CIFAR-10: image classification

- CIFAR-10 LRA: a modified version of CIFAR-10 from the Long Range Arena benchmark, where images are converted to grayscale and fed to the transformer with a token size of 1 (one pixel per token).

- Remote homology detection: predicting the fold of a protein from its amino-acid sequence
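
To make two of these tasks concrete, here is a plain-Python sketch of a Bit XOR sample generator and a small ListOps-style evaluator. The following details are assumptions about the datasets rather than something stated in this note: the bitstring length of 5, the bracket syntax, the operator names (`MAX`, `MIN`, `MED`, and `SM` for sum modulo 10), and the use of the lower median for `MED`. The labels the model must predict come from small programs of this kind.

```python
import random
import statistics


def bit_xor_example(n_bits=5, rng=random):
    """One Bit XOR sample: two random bitstrings and their element-wise XOR (the label)."""
    a = [rng.randint(0, 1) for _ in range(n_bits)]
    b = [rng.randint(0, 1) for _ in range(n_bits)]
    return a, b, [x ^ y for x, y in zip(a, b)]


# ListOps-style operators; using the lower median for MED is an assumption.
OPS = {
    "MAX": max,
    "MIN": min,
    "MED": statistics.median_low,
    "SM": lambda xs: sum(xs) % 10,  # sum modulo 10
}


def eval_listops(expr):
    """Evaluate a bracketed expression such as '[MAX 2 9 [MIN 4 7 ] 0 ]'."""
    tokens = expr.replace("[", " [ ").replace("]", " ] ").split()
    stack = []  # each frame holds [operator name, evaluated arguments so far]
    result = None
    i = 0
    while i < len(tokens):
        tok = tokens[i]
        if tok == "[":
            stack.append([tokens[i + 1], []])  # the operator follows the opening bracket
            i += 2
            continue
        if tok == "]":
            op, args = stack.pop()
            value = OPS[op](args)
            if stack:
                stack[-1][1].append(value)  # nested result becomes an argument
            else:
                result = value              # outermost expression is finished
        else:
            stack[-1][1].append(int(tok))
        i += 1
    return result


print(eval_listops("[MAX 2 9 [MIN 4 7 ] 0 ]"))  # 9
```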

The paper uses a range of setups to understand which combination of architecture/pretraining/etc. works the best.

Comments

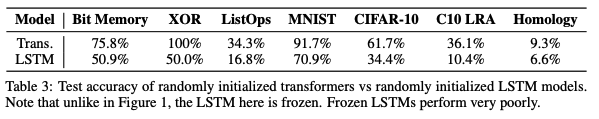

The part which is most interesting to me is not the main result of that paper but the results from table 3, shown below

It is very interesting to see a possible superiority of random transformers over random LSTMs, which may also help explain why transformers are so much better at many tasks. However, I couldn’t find information about the number of frozen parameters in that LSTM compared with the tested transformers. Also, because LSTMs don’t have layer normalization or positional embeddings, they have significantly fewer trainable parameters (80% fewer for a task like CIFAR, according to numbers from the paper).

Maybe the authors factored this in by changing the number of parameters in the LSTM model, but I couldn’t find that information in the paper.

A fairer comparison would use a transformer and an LSTM with the same number of parameters. However, this would make gradient computation in the LSTM very expensive compared with the transformer, since the LSTM has to be unrolled step by step over the sequence; the transformer was partly designed to avoid exactly this problem.

I also think it is nice to find some evidence that language models learn a set of reusable primitives. This may pave the way for unsupervised models that keep learning new things while reusing these elementary functions.

Bibliography

- Kevin Lu, Aditya Grover, Pieter Abbeel, Igor Mordatch. 2021. "Pretrained Transformers as Universal Computation Engines". arXiv:2103.05247 [cs]. http://arxiv.org/abs/2103.05247.
